Back in 1995, Shlomo Peller founded Rubidium in the visionary belief that voice user interface (VUI) could be embedded in anything from a TV remote to a microwave oven, if only the technology were sufficiently small, powerful, inexpensive and reliable.

“This was way before IoT [the Internet of Things], when voice recognition was done by computers the size of a room,” Peller tells ISRAEL21c.

“Our first product was a board that cost $1,000. Four years later we deployed our technology in a single-chip solution at the cost of $1. That’s how fast technology moves.”

But consumers’ trust moved more slowly. Although Rubidium’s VUI technology was gradually deployed in tens of millions of products, people didn’t consider voice-recognition technology truly reliable until Apple’s virtual personal assistant, Siri, came on the scene in 2011.

“Siri made the market soar. It was the first technology with a strong market presence that people felt they could count on,” says Peller, whose Ra’anana-based company’s voice-trigger technology now is built into Jabra wireless sports earbuds and 66 Audio PRO Voice’s smart wireless headphones.

“People see that VUI is now something you can put anywhere in your house,” says Peller. “You just talk to it and it talks back and it makes sense. All the giants are suddenly playing in this playground and voice recognition is everywhere. Voice is becoming the most desirable user interface.”

Still, the technology is not yet as fast, fluent and reliable as it could be. VUI depends on good Internet connectivity and can be battery-draining.

We asked the heads of Israeli companies Rubidium, VoiceSense and Beyond Verbal to predict what might be possible five years down the road, once these issues are fixed.

Here’s what they had to say.

Cars and factories

Rubidium’s Peller says that in five years’ time, voice user interface will be part of everything we do, from turning on lights, to doing laundry, to driving.

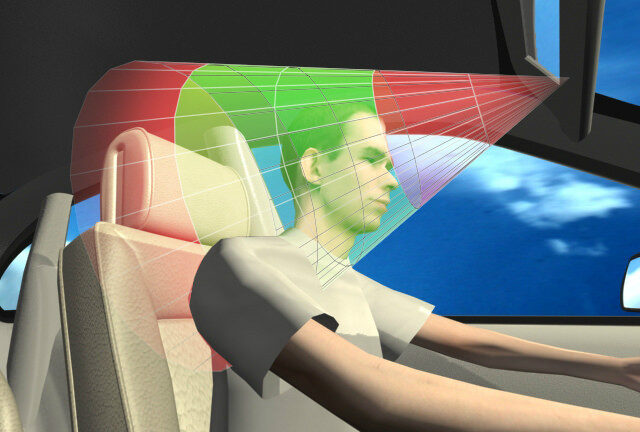

“I met with a big automaker to discuss voice interface in cars, and their working assumption is that within a couple of years all cars will be continuously connected to the Internet, and that connection will include voice interface,” says Peller.

“The use cases we find interesting are where the user interface isn’t standard, like if you try to talk to the Internet while doing a fitness activity, when you’re breathing heavily and maybe wind is blowing into the mic. Or if you try to use VUI on a factory production floor and it’s very noisy.”

As voice user interface moves to the cloud, privacy concerns will have to be dealt with, says Peller.

“We see that there has to be a seamless integration of local (embedded) technology and technology in the cloud.

“The first part of what you say, your greeting or ‘wakeup phrase,’ is recognized locally and the second part (like ‘What’s the weather tomorrow?’) is sent to the cloud. It already works like that on Alexa but it’s not efficient. Eventually we’ll see it on smartwatches and sports devices.”
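The local-then-cloud split Peller describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Rubidium's or Alexa's actual implementation: the wake phrase, the function names and the stand-in cloud service are all hypothetical, and the sketch works on text rather than audio.

```python
from typing import Callable, Optional

WAKE_PHRASE = "hello device"  # hypothetical wake-up phrase, not from the article


def split_on_wake_phrase(utterance: str, wake_phrase: str = WAKE_PHRASE) -> Optional[str]:
    """Return the text that follows the wake phrase, or None if the phrase is absent."""
    normalized = utterance.strip().lower()
    if not normalized.startswith(wake_phrase):
        return None
    # Drop the wake phrase and any punctuation or spaces right after it.
    return normalized[len(wake_phrase):].lstrip(" ,.!?")


def handle_utterance(utterance: str, send_to_cloud: Callable[[str], str]) -> Optional[str]:
    """Check the wake phrase on the device; forward only the remainder to the cloud."""
    query = split_on_wake_phrase(utterance)
    if not query:
        return None  # no wake phrase or empty request: nothing leaves the device
    return send_to_cloud(query)


def fake_cloud(query: str) -> str:
    """Stand-in for a remote speech service (an assumption made for this sketch)."""
    return f"cloud answered: {query}"
```

Calling `handle_utterance("Hello device, what's the weather tomorrow?", fake_cloud)` forwards only the question to the stand-in service, while speech that does not begin with the wake phrase is never sent anywhere.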

Diagnosing illness

Tel Aviv-based Beyond Verbal analyzes emotions from vocal intonations. Its Moodies app is used in 174 countries to help gauge what speakers’ voices (in any language) reveal about their emotional state. Moodies is used by employers to assess job interviewees, by retailers to gauge customers, and in many other scenarios.

The company’s direction is shifting to health, as the voice-analysis platform has been found to hold clues to well-being and medical conditions, says Yoram Levanon, Beyond Verbal’s chief scientist.

“There are distortions in the voice if somebody is ill, and if we can correlate the source of the distortions to the illness we can get a lot of information about the illness,” he tells ISRAEL21c.

“We worked with the Mayo Clinic for two years confirming that our technology can detect the presence or absence of a cardio disorder in a 90-second voice clip.

“We are also working with other hospitals in the world on finding verbal links to ADHD, Parkinson’s, dyslexia and mental diseases. We’re developing products and licensing the platform, and also looking to do joint ventures with AI companies to combine their products with ours.”

Levanon says that in five years, healthcare expenses will rise dramatically and many countries will experience a severe shortage of physicians. He envisions Beyond Verbal’s technology as a low-cost decision-support system for doctors.

“The population is aging and living longer so the period of time we have to monitor, from age 60 to 110, takes a lot of money and health professionals. Recording a voice costs nearly nothing and we can find a vocal biomarker for a problem before it gets serious. For example, if my voice reveals that I am depressed there is a high chance I will get Alzheimer’s disease,” says Levanon.

Beyond Verbal’s technology could sync with the AI elements in phones, smart home devices or other IoT devices to understand the user’s health situation and deliver alerts.

Your car will catch on to your mood

Banks use voice-analysis technology from Herzliya-based VoiceSense to determine potential customers’ likelihood of defaulting on a loan. Pilot projects with banks and insurance companies in the United States, Australia and Europe are helping to improve sales, loyalty and risk assessment regardless of the language spoken.

“We were founded more than a decade ago with speech analytics for call centers to monitor customer dissatisfaction in real time,” says CEO Yoav Degani.

“We noticed some of the speech patterns reflected current state of mind but others tended to reflect ongoing personality aspects and our research linked speech patterns to particular behavior tendencies. Now we can offer a full personality profile in real time for many different use cases such as medical and financial.”

Degani says the future of voice-recognition tech is about integrating data from multiple sensors for enhanced predictive analytics of intonation and content.

“Also of interest is the level of analysis that could be achieved by integrating current state of mind with overall personal tendencies, since both contribute to a person’s behavior. You could be dissatisfied at the moment and won’t purchase something but perhaps you tend to buy online in general, and you tend to buy these types of products,” says Degani.

In connected cars, automakers will use voice analysis to adjust the web content sent to each passenger in the vehicle. “If the person is feeling agitated, they could send soothing music,” says Degani.

Personal robots, he predicts, will advance from understanding the content of the user’s speech to understanding the user’s state of mind. “Once they can do that, they can respond more intelligently and even pick up on depression and illness.”

He predicts that in five years’ time people will routinely provide voice samples to healthcare providers for analytics, and human resources professionals will be able to judge a job applicant’s suitability for a specific position using a job-matching score derived from recorded voice analysis.