Every passing day brings us closer to the utopian dream of human-robot cooperation, collaboration, and cohabitation that was promised to us all those years ago by “The Jetsons.”

Our cars are learning how to drive themselves, our solar panels are keeping themselves clean, and our vacuum cleaners scuttle around our homes at night and keep our pets from thinking they run the place.

That said, there are still hiccups in that journey: namely, an endless parade of headlining news stories involving words like robot, AI, and, of course, death and/or severe injury.

Just last year, a worker was crushed by a packing robot that mistook him for a box of red peppers, a pedestrian in San Francisco was run over and dragged by a self-driving taxi, and a man in a Texas Tesla factory was pinned and gouged by an automated assembly arm.

Beyond these gruesome and specific instances, it seems like having robots around is simply more dangerous, as evidenced by a recent study showing that warehouses utilizing robots suffer 50 percent more worker injuries.

Add to that the fact that this morning my Roomba tried to eat a shower curtain and set off a chain reaction of chaos that resulted in my toddler’s toothbrush landing in the toilet, and we’ve got a real problem on our hands.

What’s going wrong?

In order to navigate the complexities and nuances of the evolving field of smart robotics, experts must put their minds to work, analyzing what’s been going wrong, figuring out why, and coming up with solutions that are at once practical and effective.

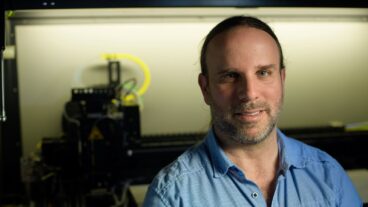

Luckily for my toddler’s dental hygiene, that’s precisely what David Faitelson has been doing.

As the head of the software engineering school at the Afeka Academic College of Engineering in Tel Aviv, Faitelson is an authority in the realm of software engineering and human-machine interaction.

He has over three decades of experience, holds a master’s degree from the Holon Institute of Technology and a doctorate from the University of Oxford, and counts software quality, design and artificial intelligence among his areas of expertise.

In a conversation with ISRAEL21c, Faitelson delves into the challenges that must be addressed in order to ensure a future that features robot housemaids but does not feature frequent trips to the ICU due to robot housemaid malfunctions.

Blurred lines, fractured spines

While in the past, there have been very clear-cut rules about how humans can and should interact with robots safely, Faitelson explains that modern developments have blurred that line.

“In the old days, it was very clear that when you had a machine — especially a large, very powerful one — you would put some kind of barrier between the machine and humans,” he says.

“The interesting thing that’s happening now is that we are trying to dilute these barriers to let robots and humans interact much more closely. This presents positive opportunities, but it also presents a certain level of danger.”

He identifies three primary hurdles that must be navigated to foster coexistence between humans and robots, and offers potential solutions for overcoming them.

1. Minimize the chance of novel scenarios

One significant challenge lies in the limitations of current artificial intelligence systems, particularly those grounded in statistical modeling.

Faitelson explains that oftentimes, the errors made by algorithm-driven robots are the result of novel scenarios that are outliers from the machines’ vast datasets.

Faced with an unfamiliar situation, the robot is likely to make its best guess at how to respond — which can often lead to unpredictable and even harmful results.

No matter how hard we try, though, there’s little chance we’ll be able to rule out every possible novel scenario that could throw our machines for a loop.

To resolve this, Faitelson suggests that we simplify a robot’s working environment — whether that be a road, a production line, or a bathroom with a low-hanging shower curtain — as much as possible.

“Make it more controlled; more predictable. You need to redesign the entire system if you want to make it safe, reliable and effective,” he says.

2. Give robots better body language

At present, robots pretty much just do what they plan on doing, the instant they’re supposed to do it, without really letting anyone know ahead of time.

While this is great for efficiency, it’s less great for bystanders within range of rapidly moving, servo-driven steel.

Faitelson underscores the importance of establishing clear channels of communication between humans and robots, so that each is aware of the other’s presence and intentions.

Drawing inspiration from human-animal interaction and non-verbal cues in dance, he envisions a future where robots convey their intentions transparently, enabling humans to anticipate and respond effectively — thereby avoiding grave injury.

“Trucks beep when they back up: that’s a warning that tells everybody around ‘I’m going to back up now, so move away.’ It’s very simple, but this is the kind of thing robots need to have,” Faitelson notes.

3. Get better at cutting out bad code

There’s something close to irony in humans programming robots that are meant to stop humans from making programming mistakes.

Still, we definitely need a way to snuff out these coding errors from the get-go, before they can propagate throughout robotic systems and lead to unforeseen malfunctions and potentially hazardous outcomes.

To solve this, Faitelson proposes a shift towards mathematically sound verification techniques that can minimize programming errors and enhance the reliability and safety of robotic systems.

“We need mathematical techniques that can verify that the software is correct, and not rely just on testing,” he says. “If you only rely on testing, you always run the chance that your tests are going to miss the one scenario when the system behaves badly and kills people.”
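For the curious, here is a toy illustration of the gap Faitelson describes between testing and verifying. Everything in it is made up for the example: the speed and distance levels, the `next_speed` controller, and the safety rule are hypothetical, and checking every state of a tiny model is only a simple stand-in for the mathematical techniques he has in mind, not a description of any real robot or tool.

```python
from itertools import product

MAX_SPEED = 3  # hypothetical arm speed levels 0..3
MAX_DIST = 5   # hypothetical distance-to-human levels 0..5


def next_speed(speed, distance):
    """Toy controller: stop or slow down when a human is close."""
    if distance <= 1:
        return 0
    if distance == 2:
        return min(speed, 1)
    # Deliberate bug: distance 3 is treated like open space.
    return min(speed + 1, MAX_SPEED)


def is_safe(speed, distance):
    """Assumed safety rule: speed never exceeds 1 within distance 3."""
    return not (distance <= 3 and speed > 1)


def spot_tests():
    """A handful of hand-picked test cases, as a test suite might have."""
    cases = [(0, 0), (3, 1), (2, 2), (1, 5), (3, 5)]
    return all(is_safe(next_speed(s, d), d) for s, d in cases)


def exhaustive_check():
    """Check every (speed, distance) state and return the unsafe ones."""
    states = product(range(MAX_SPEED + 1), range(MAX_DIST + 1))
    return [(s, d) for s, d in states if not is_safe(next_speed(s, d), d)]


if __name__ == "__main__":
    print("spot tests pass:", spot_tests())
    print("unsafe states found:", exhaustive_check())
```

The hand-picked tests all pass, yet the exhaustive check still turns up states at distance 3 where the arm ends up moving too fast. Real robots have far too many states to enumerate like this, which is where the mathematical proof techniques come in.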

Don’t worry about SkyNet just yet

Faitelson concludes by addressing the pervasive fear of robots taking over humanity, suggesting a stark disconnect between perception and reality.

“Perhaps the biggest danger is that we are being drawn into discussions of science fiction dangers,” he warns.

“Because people are busy discussing them, they don’t pay attention to the more mundane problems. But these mundane problems could become very dangerous if we ignore them.”

With this in mind, perhaps instead of freaking out about the latest nightmare-fuel produced by Boston Dynamics, we can all shift our attention to what really matters: convincing my toddler to please, please use the green toothbrush until Daddy can replace the pink one she knows and loves.