Taylor Swift was the latest victim. She was hardly the first, nor will she be the last.

We’re talking about deepfakes, digitally manipulated media that masterfully replace one person’s likeness with another’s.

Malicious individuals can create deepfake videos of celebrities, politicians and maybe even you, saying and doing things the real person would never say or do. Think of:

- Politicians giving fiery speeches that are entirely fake (a deepfake “Joe Biden” called 40,000 voters in the recent New Hampshire primary urging them not to vote, while a deepfake Volodymyr Zelensky called on Ukrainian troops to lay down their arms).

- Celebrities supporting causes they’d run far away from in real life.

- And, in the case of Swift, her face on someone else’s body as part of a pornographic video.

Deepfakes have even invaded Zoom; the person you’re video chatting with might not be real. It was exactly that sort of Zoom deepfake that recently scammed an unnamed financial institution in Hong Kong out of $25.6 million.

Deepfakes have been a problem for several years, but the technology has gotten good enough that now even casual Internet users can create a deepfake.

And with Taylor Swift dominating the cultural conversation, hijacking her face for unmentionable activities was only a matter of time.

Why is this happening? Money, of course.

For example, ElevenLabs, an American software company employing artificial intelligence (AI) to replicate people’s voices saying things they never actually uttered, was recently valued at $1.1 billion.

“We are just at the beginning of the invasion of deceit,” says Michael Matias, CEO of Clarity.ai, an Israeli startup targeting the deepfake scourge.

Transparency counts

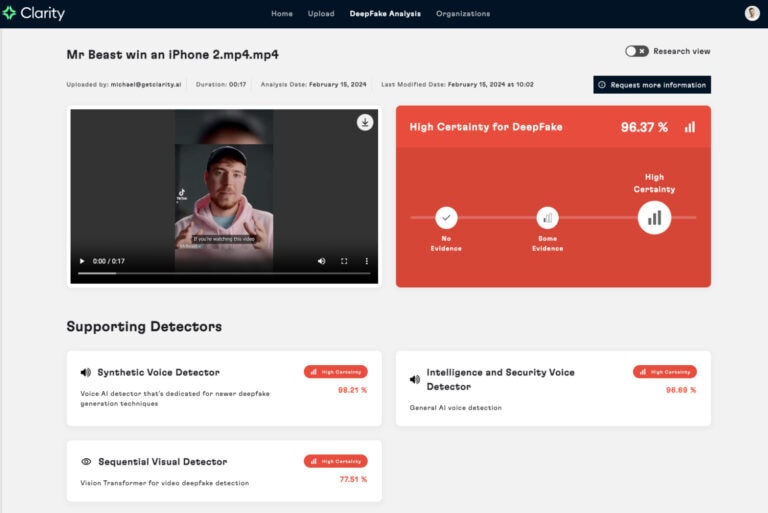

Clarity essentially reverse-engineers a possible deepfake.

“We break down the images, video and voice, and use AI to identify whether this media was created using generative AI, like ChatGPT,” Matias explains.

“We run a suite of models to determine if this is a threat. Which frame and which soundbite show an anomaly in the manipulation of information?”

Clarity creates separate models for each deepfake it finds.

“We dynamically fine-tune the AI in real-time based on what comes up,” Matias notes.

“If a model is out of date or is not relevant anymore, the system self-adapts. We don’t have to retrain 600 billion parameters – that would be too slow! The emphasis is on building an infrastructure that can automatically alert us.”

Clarity’s product is meant to be integrated into the backend systems at Internet, social media, news, government and financial organizations; it’s not a consumer tool.

If Clarity flags a video as fake, the customer can take it down or attach a label warning viewers that what they’re watching was AI-generated.

“I envision a world where authentic footage and deepfake footage live side by side, both okay, but there are transparency reports,” Matias says.

Assault on democracy

Founded in 2022 by Matias with CTO Natalie Fridman and COO Gil Avrieli, Clarity has about 15 employees and raised $16 million in February.

“Today, every news and media company is being exposed to deepfake content that is intended to change public perception,” laments Clarity angel investor Chris Marlin, former head of strategy for CNN.

Another Clarity angel investor is Prof. Larry Diamond, who teaches democracy studies at Stanford University, where Matias earned a BS in computer science last August.

“He would get emotional about how democracy is so critical to society and how it’s crumbling, and we need to do something about it,” Matias tells ISRAEL21c.

“We formed a special relationship. One day, I showed him deepfakes. ‘This is a billion-dollar assault on democracy,’ he told me.”

While at Stanford, Matias went to work for former Google CEO Eric Schmidt’s Innovation Endeavors Fund, where he began creating what would become Clarity.

“Every day, the rate of evolution for deepfakes increases – it’s a super-evolution. I approached different companies, governments and universities and asked, ‘How will you solve this?’ They were doing some things, but it was clear they were moving much slower than the rate of innovation on the deepfake creators’ side. A month before the public launch of ChatGPT, I decided to stop everything I was doing – including studying – to tackle this problem.”

Clarity is focused less on the scantily clad side of the deepfake panic and more on how deepfakes can affect, and already are affecting, politics and democracy.

Matias predicts that “every consumer in the US will experience a deepfake in the context of the coming US elections before they go to vote.”

When it hits close to home

Following the October 7 massacre and kidnapping of Israelis by Hamas terrorists, Clarity has been spending a significant amount of time countering disinformation about the war.

“When it comes to negotiations and hostages and these very delicate, identity-based situations, deepfakes are a method in which you alter reality and make people think, through social engineering, that something happened that really didn’t happen,” Matias told The Times of Israel.

Videos posted on social-media platforms like TikTok can “change the public discourse of what happens,” Matias notes.

When CBS News sifted through a thousand videos submitted from people supposedly on the ground in Israel or Gaza, only 10% of those submissions were found to be genuine. The rest were either deepfakes or hijacked from other conflicts (such as images of destruction repurposed from conflicts in Syria and Iraq).

“The war has been a big catalyst for us,” says Matias. “It’s forced us to advance our technology faster than we had thought we would, to address all the media coming out of the war. The government needed the tech immediately. They couldn’t wait for months or years.”

How creative can you be?

Matias, winner of the 2023 Goldman Sachs “Builders and Innovators” award, served in the Israel Defense Forces’ 8200 signals intelligence unit (equivalent to the US National Security Agency) prior to starting Clarity.

The war has affected him personally and professionally. “I know people who were killed at the Supernova music festival,” he says. “I have family members and employees in the [army] reserves. I’m in the reserves.”

His military background is not incidental to the company.

Matias tells ISRAEL21c that when he returned from Palo Alto to form Clarity, “I wanted to bring my friends and colleagues from the army to help us tackle this challenge. Israelis get massive experience in the army using different methodologies that few in the world get to use. We’re measured by speed, efficiency and adaptiveness.

“No day was like the day before in the IDF. You learn to become self-reliant, to know you can overcome any challenge, particularly technological ones. It’s a special mindset I haven’t seen elsewhere – the ability to trust you will solve the problem. So, then it becomes a question of just how creative you can be.”

Deepfakes are like viruses

While Clarity has competition in spotting and stopping deepfakes (Reality Defender and Sentinel have raised $15 million and $2.5 million, respectively), Matias says one of Clarity’s core differentiators is that it employs the principles of cybersecurity, treating deepfakes as “viruses” and “developing the tools to inoculate media workflows.”

The product has paying customers and is deployed in Israel and overseas. Clarity customers can choose a subscription or a pay-as-you-go plan. Journalists can use the tool at no cost to vet possible deepfakes they come across.

Could Clarity’s solution address Taylor Swift’s porn concerns?

Yes, Matias says, but as much as “porn content can ruin a person’s life, a deepfake of a presidential candidate can lead an entire society in a different direction.”

He tells ISRAEL21c that “we see ourselves dealing with both [types of] cases over time. But this year, we’ll be very focused on the elections.”
